Calculus, Probability, and Statistics Primers, Continued

Conditional Expectation (OPTIONAL)

In this lesson, we are going to continue our exploration of conditional expectation and look at several cool applications. This lesson will likely be the toughest one for the foreseeable future, but don't panic!

Conditional Expectation Recap

Let's revisit the conditional expectation of $Y$ given $X = x$. The definition of this expectation is as follows:

For example, suppose $f(x,y) = 21x^2y / 4$ for $x^2 \leq y \leq 1$. Then, by definition:

We calculated the marginal pdf, $f_X(x)$, previously, as the integral of $f(x,y)$ over all possible values of $y \in [x^2, 1]$. We can plug in $f_X(x)$ and $f(x, y)$ below:

Given $f(y|x)$, we can now compute $E[Y | X = x]$:

We adjust the limits of integration to match the limits of $y$:

Now, complete the integration:
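Carrying the integration through, with $f_X(x) = 21x^2(1-x^4)/8$ and $f(y|x) = 2y/(1-x^4)$, gives the closed form $E[Y|X=x] = 2(1-x^6)/(3(1-x^4))$. As a sanity check, here is a minimal Python sketch that evaluates the definition numerically and compares it with that closed form at a few values of $x$. The midpoint-rule helper `midpoint_integral` is a hypothetical name written for illustration, not a library function:

```python
def midpoint_integral(g, a, b, steps=20_000):
    """Approximate the integral of g over [a, b] with the midpoint rule."""
    h = (b - a) / steps
    return h * sum(g(a + (k + 0.5) * h) for k in range(steps))


def joint_pdf(x, y):
    """f(x, y) = 21 x^2 y / 4 on the region x^2 <= y <= 1, and 0 elsewhere."""
    return 21 * x**2 * y / 4 if x**2 <= y <= 1 else 0.0


def cond_expectation_numeric(x):
    """E[Y | X = x] straight from the definition, integrating y over [x^2, 1]."""
    f_x = midpoint_integral(lambda y: joint_pdf(x, y), x**2, 1)      # marginal f_X(x)
    num = midpoint_integral(lambda y: y * joint_pdf(x, y), x**2, 1)  # integral of y f(x, y)
    return num / f_x                                                 # f(y|x) = f(x, y) / f_X(x)


def cond_expectation_closed_form(x):
    """2(1 - x^6) / (3(1 - x^4)), valid where f_X(x) > 0, i.e. 0 < |x| < 1."""
    return 2 * (1 - x**6) / (3 * (1 - x**4))


for x in (-0.8, 0.3, 0.5):
    print(x, cond_expectation_numeric(x), cond_expectation_closed_form(x))
```

We avoid $x = 0$ and $x = \pm 1$ because $f_X(x) = 0$ there, so the conditional pdf is undefined.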

Double Expectations

We just looked at the expected value of $Y$ given a particular value $X = x$. Now we are going to average the expected value of $Y$ over all values of $X$. In other words, we are going to take a weighted average of all the conditional expected values, weighting each one by the distribution of $X$, which will give us the overall population average for $Y$.

The theorem of double expectations states that the expected value of the expected value of $Y$ given $X$ is the expected value of $Y$. In other words:

Let's look at $E[Y|X]$. We can use the formula that we used to calculate $E[Y|X=x]$ to find $E[Y|X]$, replacing $x$ with $X$. Let's look back at our conditional expectation from above:

If we replace $x$ with $X$, we get the following expression:

What does this mean? $E[Y|X]$ is itself a random variable that is a function of the random variable $X$. Let's call this function $h$:

We now have to calculate $E[h(X)]$, which we can accomplish using the definition of LOTUS:

Let's substitute in for $h(x)$ and $h(X)$:

Remember the definition for $E[Y|X = x]$:

Thus:

We can rearrange the right-hand side by swapping the order of integration. We can then move $y$ outside of the inner integral, since $y$ is a constant when we integrate with respect to $x$:

Remember now the definition for the conditional pdf:

We can substitute in $f(x,y)$ for $f(y|x)f_X(x)$:

Let's remember the definition for the marginal pdf of $Y$:

Let's substitute:

Of course, the expected value of $Y$, $E[Y]$ equals:

Thus:

Example

Let's apply this theorem using our favorite joint pdf: $f(x,y) = 21x^2y / 4, x^2 \leq y \leq 1$. Through previous examples, we know $f_X(x)$, $f_Y(y)$ and $E[Y|x]$:

We are going to look at two ways to compute $E[Y]$. First, we can just use the definition of expected value and integrate the product $yf_Y(y)\,dy$ over the real line:

Now, let's calculate $E[Y]$ using the double expectation theorem we just learned:
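Both routes should land on $E[Y] = 7/9$. The sketch below is a numerical check, not the derivation itself: it uses the marginals $f_X(x) = 21x^2(1-x^4)/8$ on $[-1,1]$ and $f_Y(y) = 7y^{5/2}/2$ on $[0,1]$, together with $E[Y|X=x]$ from above, and integrates each route with a hand-rolled midpoint rule (a helper written for illustration):

```python
def midpoint_integral(g, a, b, steps=100_000):
    """Approximate the integral of g over [a, b] with the midpoint rule."""
    h = (b - a) / steps
    return h * sum(g(a + (k + 0.5) * h) for k in range(steps))


def f_X(x):
    """Marginal pdf of X: 21 x^2 (1 - x^4) / 8 for -1 <= x <= 1."""
    return 21 * x**2 * (1 - x**4) / 8


def f_Y(y):
    """Marginal pdf of Y: 7 y^(5/2) / 2 for 0 <= y <= 1."""
    return 7 * y**2.5 / 2


def cond_mean(x):
    """E[Y | X = x] = 2(1 - x^6) / (3(1 - x^4))."""
    return 2 * (1 - x**6) / (3 * (1 - x**4))


# Route 1: E[Y] as the integral of y f_Y(y)
direct = midpoint_integral(lambda y: y * f_Y(y), 0.0, 1.0)
# Route 2: E[E(Y|X)] as the integral of E[Y | X = x] f_X(x) (LOTUS with h(x) = E[Y | X = x])
double = midpoint_integral(lambda x: cond_mean(x) * f_X(x), -1.0, 1.0)

print(direct, double, 7 / 9)
```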

Mean of the Geometric Distribution

In this application, we are going to see how we can use double expectation to calculate the mean of a geometric distribution.

Let $Y$ equal the number of coin flips needed to see the first head, $H$ (including the flip that comes up heads), where $P(H) = p$. Thus, $Y$ is distributed as a geometric random variable parameterized by $p$: $Y \sim \text{Geom}(p)$. We know that the pmf of $Y$ is $f_Y(y) = P(Y = y) = (1-p)^{y-1}p, y = 1,2,...$. In other words, $P(Y = y)$ is the product of the probability of $y-1$ failures and the probability of one success.

Let's calculate the expected value of $Y$ using the summation equation we've used previously (take the result on faith):

Now we are going to use double expectation and a standard one-step conditioning argument to compute $E[Y]$. First, let's define $X = 1$ if the first flip is $H$ and $X = 0$ otherwise. Let's pretend that we have knowledge of the first flip. We don't really have this knowledge, but we do know that the first flip can either be heads or tails: $P(X = 1) = p, P(X = 0) = 1 - p$.

Let's remember the double expectation formula:

What are the $x$-values? $X$ can only equal $0$ or $1$, so:

Now, if $X = 0$, the first flip was tails, and I have to start counting all over again. Because the flips are independent, the expected number of additional flips I need until I see heads is again $E[Y]$. However, I have already flipped once, and I flipped tails: that's what $X = 0$ means. So, the expected total number of flips, given that the first flip was tails, is $1 + E[Y]$: $E[Y|X=0] = 1 + E[Y]$. What is $P(X = 0)$? It's just $1 - p$. Thus:

Now, if $X = 1$, the first flip was heads. I won! If I know that I flipped heads on the first try, then exactly one flip was needed, so $E[Y|X=1] = 1$. What is $P(X = 1)$? It's just $p$. Thus:

Let's solve for $E[Y]$:
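A sketch of the algebra, using the two conditional expectations from the cases above:

$$
\begin{aligned}
E[Y] &= E[Y|X=0]\,P(X=0) + E[Y|X=1]\,P(X=1) \\
&= (1 + E[Y])(1 - p) + (1)(p) \\
&= 1 + (1 - p)E[Y].
\end{aligned}
$$

Subtracting $(1-p)E[Y]$ from both sides leaves $pE[Y] = 1$, so $E[Y] = 1/p$, which agrees with the summation approach.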

Computing Probabilities by Conditioning

Let $A$ be some event. We define the random variable $Y=1$ if $A$ occurs, and $Y = 0$ otherwise. We refer to $Y$ as an [indicator function](https://en.wikipedia.org/wiki/Indicator_function) of $A$; that is, the value of $Y$ indicates the occurrence of $A$. The expected value of $Y$ is given by:

Let's enumerate the $y$-values:

What is $P(Y = 1)$? Well, $Y = 1$ when $A$ occurs, so $P(Y = 1) = P(A) = E[Y]$. Indeed, the expected value of an indicator function is the probability of the corresponding event.

Similarly, for any random variable, $X$, we have:

If we enumerate the $y$-values, we have:

Since we know that $P(Y = 1|X = x) = P(A|X = x)$, then:

Let's look at an implication of the above result. By definition:

Using LOTUS:

Since we saw that $E[Y|X=x] = P(A|X=x)$, then:

Theorem

The result above implies that, if $X$ and $Y$ are independent, continuous random variables, then:

To prove, let $A = \{Y < X\}$. Then:

Substitute $A = \{Y < X\}$:

What's $P(Y < X|X=x)$? In other words, for a given $X = x$, what's the probability that $Y < x$? Since $X$ and $Y$ are independent, conditioning on $X = x$ does not change the distribution of $Y$, so this is just $P(Y < x)$:

Example

Suppose we have two random variables, $X \sim \text{Exp}(\mu)$ and $Y \sim \text{Exp}(\lambda)$. Then:

Note that $P(Y < x)$ is the cdf of $Y$ at $x$: $F_Y(x)$. Thus:

Since $X$ and $Y$ are both exponentially distributed, we know that they have the following pdf and cdf, by definition:

Let's substitute these values in, adjusting the limits of integration appropriately:

Let's rearrange:

Let $u_1 = -\mu x$. Then $du_1 = -\mu dx$. Let $u_2 = -\lambda x - \mu x$. Then $du_2 = -(\lambda + \mu)dx$. Thus:

Now we can integrate:

As it turns out, this result makes sense: exponential random variables model the waiting times until the first arrivals of two independent [Poisson processes](https://en.wikipedia.org/wiki/Poisson_point_process), and $\lambda$ and $\mu$ are the arrival rates. For example, suppose that $Y$ is the time until the first woman arrives at a store, and $X$ is the time until the first man arrives. If women are coming in at a rate of nine per hour ($\lambda = 9$) and men are coming in at a rate of three per hour ($\mu = 3$), then the probability of a woman arriving before a man is $\lambda / (\lambda + \mu) = 9/12 = 3/4$.
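A Monte Carlo sketch can check $P(Y < X) = \lambda/(\lambda + \mu)$. The rates below, $\lambda = 9$ and $\mu = 3$, are chosen for illustration (the formula gives $3/4$); note that Python's `random.expovariate` takes the rate, not the mean, as its argument:

```python
import random


def estimate_p_y_before_x(lam, mu, trials=200_000, seed=1):
    """Monte Carlo estimate of P(Y < X) for independent Y ~ Exp(lam) and X ~ Exp(mu)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        y = rng.expovariate(lam)   # expovariate takes the rate, so the mean is 1 / lam
        x = rng.expovariate(mu)
        hits += y < x              # True counts as 1
    return hits / trials


lam, mu = 9.0, 3.0
estimate = estimate_p_y_before_x(lam, mu)
print(estimate, lam / (lam + mu))   # the exact value is 0.75
```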

Variance Decomposition

Just as we can use double expectation for the expected value of $Y$, we can express the variance of $Y$, $\text{Var}(Y)$ in a similar fashion, which we refer to as variance decomposition:

Proof

Let's start with the first term: $E[\text{Var}(Y|X)]$. Remember the definition of variance, as the second central moment:

Thus, we can express $E[\text{Var}(Y|X)]$ as:

Note that, since expectation is linear:

Notice the first expression on the right-hand side. That's a double expectation, and we know how to simplify that:

Now let's look at the second term in the variance decomposition: $\text{Var}[E(Y|X)]$. Considering again the definition for variance above, we can transform this term:

In this equation, we again see a double expectation, quantity squared. So:

Remember the equation for variance decomposition:

Let's plug in the two expressions we just derived for the first and second terms, respectively:

Notice the cancellation of the two scary inner terms to reveal the definition for variance:
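To see the identity $\text{Var}(Y) = E[\text{Var}(Y|X)] + \text{Var}(E[Y|X])$ hold on concrete numbers, here is a small Python sketch using exact fractions. The joint pmf is hypothetical, made up purely for illustration:

```python
from fractions import Fraction as F

# Hypothetical joint pmf of (X, Y), chosen only to illustrate the identity.
joint = {
    (0, 1): F(1, 4), (0, 3): F(1, 4),
    (1, 2): F(1, 6), (1, 5): F(1, 3),
}


def mean(pairs):
    """Mean of a list of (value, probability) pairs whose probabilities sum to 1."""
    return sum(v * p for v, p in pairs)


def var(pairs):
    """Variance of a list of (value, probability) pairs."""
    m = mean(pairs)
    return sum((v - m) ** 2 * p for v, p in pairs)


# Marginal pmf of X
p_x = {}
for (x, _), p in joint.items():
    p_x[x] = p_x.get(x, 0) + p

# Conditional mean and variance of Y given each X = x
cond_mean, cond_var = {}, {}
for x, px in p_x.items():
    cond = [(y, p / px) for (xx, y), p in joint.items() if xx == x]
    cond_mean[x] = mean(cond)
    cond_var[x] = var(cond)

var_y = var([(y, p) for (_, y), p in joint.items()])
e_of_cond_var = mean([(cond_var[x], px) for x, px in p_x.items()])      # E[Var(Y|X)]
var_of_cond_mean = var([(cond_mean[x], px) for x, px in p_x.items()])   # Var(E[Y|X])

print(var_y, e_of_cond_var + var_of_cond_mean)
```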

Covariance and Correlation

In this lesson, we are going to talk about independence, covariance, correlation, and some related results. Correlation shows up all over the place in simulation, from inputs to outputs to everywhere in between.

LOTUS in 2D

Suppose that $h(X,Y)$ is some function of two random variables, $X$ and $Y$. Then, via LOTUS, we know how to calculate the expected value, $E[h(X,Y)]$:

Expected Value, Variance of Sum

Whether or not $X$ and $Y$ are independent, the sum of the expected values equals the expected value of the sum:

If $X$ and $Y$ are independent, then the sum of the variances equals the variance of the sum:

Note that we need the equations for LOTUS in two dimensions to prove both of these theorems.

Aside: I tried to prove these theorems. It went terribly! Check out the proper proofs [here](http://www.milefoot.com/math/stat/rv-sums.htm).

Random Sample

Let's suppose we have a set of $n$ random variables: $X_1,...,X_n$. This set is said to form a random sample from the pmf/pdf $f(x)$ if all the variables are (i) independent and (ii) each $X_i$ has the same pdf/pmf $f(x)$.

We can use the following notation to refer to such a random sample:

Note that "iid" means "independent and identically distributed", which is what (i) and (ii) mean, respectively, in our definition above.

Theorem

Given a random sample, $X_1,...,X_n \overset{\text{iid}}{\sim} f(x)$, the sample mean, $\bar{X_n}$ equals the following:

Given the sample mean, the expected value of the sample mean is the expected value of any of the individual variables, and the variance of the sample mean is the variance of any of the individual variables divided by $n$:

We can observe that as $n$ increases, $E[\bar{X_n}]$ is unaffected, but $\text{Var}(\bar{X_n})$ decreases.
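A quick simulation makes this concrete. The sketch below assumes $\text{Unif}(0,1)$ observations (so $\mu = 1/2$ and $\sigma^2 = 1/12$), an arbitrary choice for illustration, and compares the empirical variance of many sample means with $\sigma^2/n$:

```python
import random
import statistics


def sample_means(n, reps=20_000, seed=7):
    """Sample means of `reps` independent samples of size n from Unif(0, 1)."""
    rng = random.Random(seed)
    return [statistics.fmean(rng.random() for _ in range(n)) for _ in range(reps)]


results = {n: sample_means(n) for n in (1, 4, 16)}
for n, means in results.items():
    # E[X-bar] should stay near 1/2, while Var(X-bar) should track (1/12) / n
    print(n, statistics.fmean(means), statistics.variance(means), 1 / (12 * n))
```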

Covariance

Covariance is one of the most fundamental measures of non-independence between two random variables. The covariance between $X$ and $Y$, $\text{Cov}(X, Y)$ is defined as:

The right-hand side of this equation looks daunting, so let's see if we can simplify it. We can first expand the product:

Since expectation is linear, we can rewrite the right-hand side as a difference of expected values:

Note that both $E[X]$ and $E[Y]$ are just numbers: the expected values of the corresponding random variables. As a result, we can apply two principles here: $E[aX] = aE[X]$ and $E[a] = a$. Consider the following rearrangement:

The last three terms each equal $\pm E[X]E[Y]$, and together they sum to $-E[X]E[Y]$. Thus:

This equation is much easier to work with; namely, $h(X,Y) = XY$ is a much simpler function than $h(X,Y) = (X-E[X])(Y - E[Y])$ when it comes time to apply LOTUS.

Let's understand what happens when we take the covariance of $X$ with itself:

Theorem

If $X$ and $Y$ are independent random variables, then $\text{Cov}(X, Y) = 0$. On the other hand, a covariance of $0$ does not mean that $X$ and $Y$ are independent.

For example, consider two random variables, $X \sim \text{Unif}(-1,1)$ and $Y = X^2$. Since $Y$ is a function of $X$, the two random variables are dependent: if you know $X$, you know $Y$. However, take a look at the covariance:

What is $E[X]$? Well, we can integrate the pdf from $-1$ to $1$, or we can understand that the expected value of a uniform random variable is the average of the bounds of the distribution. That's a long way of saying that $E[X] =(-1 + 1) / 2 = 0$.

Now, what is $E[X^3]$? We can apply LOTUS:

What is the pdf of a uniform random variable? By definition, it's one over the difference of the bounds:

Let's integrate and evaluate:

Thus:

Just because the covariance between $X$ and $Y$ is $0$ does not mean that they are independent!
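Because every moment of $\text{Unif}(-1,1)$ is a simple integral, we can reproduce this calculation exactly. The sketch below computes $E[X^k] = \int_a^b x^k/(b-a)\,dx$ with Python fractions; `uniform_moment` is a hypothetical helper introduced for illustration:

```python
from fractions import Fraction


def uniform_moment(k, a=-1, b=1):
    """E[X^k] for X ~ Unif(a, b): the integral of x^k / (b - a) over [a, b]."""
    return Fraction(b ** (k + 1) - a ** (k + 1), (k + 1) * (b - a))


e_x = uniform_moment(1)    # E[X]
e_x2 = uniform_moment(2)   # E[Y] = E[X^2]
e_x3 = uniform_moment(3)   # E[XY] = E[X^3]
cov = e_x3 - e_x * e_x2    # Cov(X, Y) = E[XY] - E[X]E[Y]
print(e_x, e_x2, e_x3, cov)
```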

More Theorems

Suppose that we have two random variables, $X$ and $Y$, as well as two constants, $a$ and $b$. We have the following theorem:

Whether or not $X$ and $Y$ are independent,

Note that we looked at a theorem previously which gave an equation for the variance of $X + Y$ when both variables are independent: $\text{Var}(X + Y) = \text{Var}(X) + \text{Var}(Y)$. That equation was a special case of the theorem above, where $\text{Cov}(X,Y) = 0$ as is the case between two independent random variables.

Correlation

The correlation between $X$ and $Y$, $\rho$, is equal to:

Note that correlation is standardized covariance. Because of this standardization, $-1 \leq \rho \leq 1$ for any $X$ and $Y$.

If two variables are highly positively correlated, then $\rho$ will be close to $1$. If two variables are highly negatively correlated, then $\rho$ will be close to $-1$. Two variables with low correlation will have a $\rho$ close to $0$.

Example

Consider the following joint pmf:

For this pmf, $X$ can take values in $\{2, 3, 4\}$ and $Y$ can take values in $\{40, 50, 60\}$. Note the marginal pmfs along the table's right and bottom, and remember that all pmfs sum to one when calculated over all appropriate values.

What is the expected value of $X$? Let's use $f_X(x)$:

Now let's calculate the variance:

What is the expected value of $Y$? Let's use $f_Y(y)$:

Now let's calculate the variance:

If we want to calculate the covariance of $X$ and $Y$, we need to know $E[XY]$, which we can calculate using two-dimensional LOTUS:

With $E[XY]$ in hand, we can calculate the covariance of $X$ and $Y$:

Finally, we can calculate the correlation:
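The same steps (marginal means, variances, $E[XY]$ via two-dimensional LOTUS, covariance, then correlation) can be packaged into one function. In this sketch the joint pmf is a Python dictionary mapping $(x, y)$ pairs to probabilities; both tables in the usage lines are hypothetical, chosen only to exercise the function:

```python
import math


def correlation(joint):
    """Correlation of (X, Y) from a joint pmf given as {(x, y): probability}."""
    e_x = sum(x * p for (x, _), p in joint.items())
    e_y = sum(y * p for (_, y), p in joint.items())
    var_x = sum(x * x * p for (x, _), p in joint.items()) - e_x**2
    var_y = sum(y * y * p for (_, y), p in joint.items()) - e_y**2
    e_xy = sum(x * y * p for (x, y), p in joint.items())   # 2-D LOTUS with h(x, y) = xy
    cov = e_xy - e_x * e_y                                 # Cov(X, Y) = E[XY] - E[X]E[Y]
    return cov / math.sqrt(var_x * var_y)


# Hypothetical table 1: Y = 10X + 20 exactly, so rho should be 1.
linear = {(2, 40): 0.3, (3, 50): 0.4, (4, 60): 0.3}

# Hypothetical table 2: the joint pmf is the product of its marginals, so rho should be 0.
p_x = {2: 0.3, 3: 0.4, 4: 0.3}
p_y = {40: 0.2, 50: 0.5, 60: 0.3}
independent = {(x, y): px * py for x, px in p_x.items() for y, py in p_y.items()}

print(correlation(linear), correlation(independent))
```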

Portfolio Example

Let's look at two different assets, $S_1$ and $S_2$, that we hold in our portfolio. The expected yearly returns of the assets are $E[S_1] = \mu_1$ and $E[S_2] = \mu_2$, and the variances are $\text{Var}(S_1) = \sigma_1^2$ and $\text{Var}(S_2) = \sigma_2^2$. The covariance between the assets is $\sigma_{12}$.

A portfolio is just a weighted combination of assets, and we can define our portfolio, $P$, as:

The portfolio's expected value is the sum of the expected values of the assets times their corresponding weights:

Let's calculate the variance of the portfolio:

Remember how we express $\text{Var}(X + Y)$:

Remember that $\text{Var}(aX) = a^2\text{Var}(X)$ and $\text{Cov}(aX, bY) = ab\text{Cov}(X,Y)$. Thus:

Finally, let's substitute in the appropriate variables:

How might we optimize this portfolio? One thing we might want to optimize for is minimal variance: many people want their portfolios to have as little volatility as possible.

Let's recap. Given a function $f(x)$, how do we find the $x$ that minimizes $f(x)$? We can take the derivative, $f'(x)$, set it to $0$, solve for $x$, and then confirm with the second derivative ($f''(x) > 0$) that we found a minimum. Let's apply this logic to $\text{Var}(P)$. First, we take the derivative with respect to $w$:

Then, we set the derivative equal to $0$ and solve for $w$:
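A sketch of the algebra, writing the portfolio variance as $\text{Var}(P) = w^2\sigma_1^2 + (1-w)^2\sigma_2^2 + 2w(1-w)\sigma_{12}$:

$$
\frac{d}{dw}\text{Var}(P) = 2w\sigma_1^2 - 2(1-w)\sigma_2^2 + 2(1-2w)\sigma_{12} = 0
\quad\Longrightarrow\quad
w = \frac{\sigma_2^2 - \sigma_{12}}{\sigma_1^2 + \sigma_2^2 - 2\sigma_{12}}
$$

The second derivative, $2(\sigma_1^2 + \sigma_2^2 - 2\sigma_{12}) = 2\,\text{Var}(S_1 - S_2)$, is positive whenever $S_1 - S_2$ is not constant, so this critical point is a minimum.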

Example

Suppose $E[S_1] = 0.2$, $E[S_2] = 0.1$, $\text{Var}(S_1) = 0.2$, $\text{Var}(S_2) = 0.4$, and $\text{Cov}(S_1, S_2) = -0.1$.

What value of $w$ maximizes the expected return of this portfolio? We don't even have to do any math: assuming $0 \leq w \leq 1$ (no short selling), just allocate 100% of the portfolio to the asset with the higher expected return, $S_1$. Since we define our portfolio as $wS_1 + (1 - w)S_2$, the correct value for $w$ is $1$.

What value of $w$ minimizes the variance? Let's plug and chug:

To minimize variance, we should hold a portfolio consisting of $5/8$ $S_1$ and $3/8$ $S_2$.
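The plug-and-chug step can be checked with exact arithmetic. This sketch encodes $\text{Var}(P) = w^2\sigma_1^2 + (1-w)^2\sigma_2^2 + 2w(1-w)\sigma_{12}$ and the minimizing weight obtained by setting its derivative to zero; the function names are hypothetical helpers chosen for illustration:

```python
from fractions import Fraction as F


def portfolio_variance(w, var1, var2, cov12):
    """Var(w S1 + (1 - w) S2) = w^2 var1 + (1 - w)^2 var2 + 2 w (1 - w) cov12."""
    return w**2 * var1 + (1 - w) ** 2 * var2 + 2 * w * (1 - w) * cov12


def min_variance_weight(var1, var2, cov12):
    """Weight on S1 where the derivative of the portfolio variance is zero."""
    return (var2 - cov12) / (var1 + var2 - 2 * cov12)


mu1, mu2 = F(2, 10), F(1, 10)
var1, var2, cov12 = F(2, 10), F(4, 10), F(-1, 10)

w = min_variance_weight(var1, var2, cov12)
print(w, portfolio_variance(w, var1, var2, cov12), w * mu1 + (1 - w) * mu2)   # 5/8 7/80 13/80
```

The minimum-variance portfolio has variance $7/80 = 0.0875$, well below either asset alone, at the cost of an expected return of $13/80 = 0.1625$ instead of $0.2$.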

There are tradeoffs in any optimization. For example, optimizing for maximal expected return may introduce high levels of volatility into the portfolio. Conversely, optimizing for minimal variance may result in paltry returns.

Probability Distributions

In this lesson, we are going to review several popular discrete and continuous distributions.

Bernoulli (Discrete)

Suppose we have a random variable, $X \sim \text{Bernoulli}(p)$. $X$ has the following pmf:

Additionally, $X$ has the following properties:

Binomial (Discrete)

The Bernoulli distribution generalizes to the binomial distribution. Suppose we have $n$ iid Bernoulli random variables: $X_1,X_2,...,X_n \overset{\text{iid}}{\sim} \text{Bern}(p)$. Each $X_i$ takes on the value $1$ with probability $p$ and $0$ with probability $1-p$. If we sum the $X_i$'s, which counts the number of successes, we have the following random variable, $Y$:

$Y$ has the following pmf:

Notice the binomial coefficient in this equation. We read $\binom{n}{y}$ as "n choose y", which is defined as:

What's going on here? First, what is the probability of one particular sequence with $y$ successes? More precisely, it's the probability of $y$ successes and $n-y$ failures: $p^yq^{n-y}$, where $q = 1 - p$. Of course, the outcome of $y$ consecutive successes followed by $n-y$ consecutive failures is just one particular arrangement of many. How many? $n$ choose $y$. This is what the binomial coefficient expresses.

Additionally, $Y$ has the following properties:

Note that the variance and the expected value are equal to $n$ times the variance and the expected value of the Bernoulli random variable. This relationship makes sense: a binomial random variable is the sum of $n$ iid Bernoulli random variables. The moment-generating function looks a little bit different: it is the Bernoulli mgf raised to the $n$th power, because the mgf of a sum of independent random variables is the product of their mgfs.
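As a numerical check of these properties, the sketch below builds the binomial pmf with Python's `math.comb` for an arbitrary illustrative choice of $n = 10$ and $p = 0.3$, then compares the pmf's total, mean, variance, and mgf against $1$, $np$, $npq$, and $(pe^t + q)^n$:

```python
import math


def binomial_pmf(y, n, p):
    """P(Y = y) = C(n, y) p^y q^(n - y), with q = 1 - p."""
    return math.comb(n, y) * p**y * (1 - p) ** (n - y)


n, p = 10, 0.3
q = 1 - p
pmf = [binomial_pmf(y, n, p) for y in range(n + 1)]

total = sum(pmf)
mean = sum(y * f for y, f in enumerate(pmf))
var = sum(y * y * f for y, f in enumerate(pmf)) - mean**2

t = 0.5   # any fixed t works for comparing the mgfs
mgf = sum(math.exp(t * y) * f for y, f in enumerate(pmf))

print(total, mean, n * p)
print(var, n * p * q)
print(mgf, (p * math.exp(t) + q) ** n)
```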

Geometric (Discrete)

Suppose we have a random variable, $X \sim \text{Geometric}(p)$. A geometric random variable corresponds to the number of $\text{Bern}(p)$ trials until a success occurs. For example, three failures followed by a success ("FFFS") implies that $X = 4$. A geometric random variable has the following pmf:

We can see that this equation directly corresponds to the probability of $x - 1$ failures, each with probability $q$, followed by one success, with probability $p$.

Additionally, $X$ has the following properties:

Negative Binomial (Discrete)

The geometric distribution generalizes to the negative binomial distribution. Suppose that we are interested in the number of trials it takes to see $r$ successes. We can add $r$ iid $\text{Geom}(p)$ random variables to get the random variable $Y \sim \text{NegBin}(r, p)$. For example, if $r = 3$, then a run of "FFFSSFS" implies that $Y = 7$. $Y$ has the following pmf:

Additionally, $Y$ has the following properties:

Note that the variance and the expected value are equal to $r$ times the variance and the expected value of the geometric random variable. This relationship makes sense: a negative binomial random variable is the sum of $r$ geometric random variables.
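A short simulation can confirm the mean $r/p$ and variance $rq/p^2$ of the negative binomial. This sketch works straight from the definition: it counts $\text{Bern}(p)$ trials until the $r$th success, so with $r = 1$ it draws a geometric random variable. The values $r = 3$ and $p = 0.25$ are arbitrary illustrative choices:

```python
import random


def trials_until_r_successes(r, p, rng):
    """Count Bern(p) trials until the r-th success (one NegBin(r, p) draw)."""
    trials = successes = 0
    while successes < r:
        trials += 1
        if rng.random() < p:
            successes += 1
    return trials


rng = random.Random(42)
r, p, reps = 3, 0.25, 50_000
draws = [trials_until_r_successes(r, p, rng) for _ in range(reps)]
mean = sum(draws) / reps
var = sum((d - mean) ** 2 for d in draws) / (reps - 1)
print(mean, r / p)                  # theory: r / p = 12
print(var, r * (1 - p) / p**2)      # theory: r q / p^2 = 36
```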

Poisson (Discrete)

A counting process, $N(t)$ keeps track of the number of "arrivals" observed between time $0$ and time $t$. For example, if $7$ people show up to a store by time $t=3$, then $N(3) = 7$. A Poisson process is a counting process that satisfies the following criteria.

Arrivals must occur one-at-a-time at a rate, $\lambda$. For example, $\lambda = 4 / \text{hr}$ means that, on average, arrivals occur every fifteen minutes, yet no two arrivals coincide.

Disjoint time increments are independent. Suppose we are looking at arrivals on the intervals 12 am - 2 am and 5 am - 10 am. Independent increments means that the arrivals in the first interval don't impact arrivals in the second.

Increments are stationary; in other words, the distribution of the number of arrivals in the interval $[s, s + t]$ depends only on the interval's length, $t$. It does not depend on where the interval starts, $s$.

A random variable $X \sim \text{Pois}(\lambda)$ describes the number of arrivals that a Poisson process experiences in one time unit, i.e., $N(1)$. $X$ has the following pmf:

Additionally, $X$ has the following properties:

Uniform (Continuous)

A uniform random variable, $X \sim \text{Uniform}(a,b)$, has the following pdf:

Additionally, $X$ has the following properties:

Exponential (Continuous)

A continuous, exponential random variable $X \sim \text{Exponential}(\lambda)$ has the following pdf:

Additionally, $X$ has the following properties:

The exponential distribution also has a memoryless property, which means that for $s, t > 0$, $P(X > s + t | X > s) = P(X > t)$. For example, if we have a light bulb, and we know that it has lived for $s$ time units, the conditional probability that it will live for $s + t$ time units (an additional $t$ units) is the unconditional probability that it will live for $t$ time units. In other words, the bulb has no "memory" of the prior $s$ time units.

Let's look at a concrete example. If $X \sim \text{Exp}(1/100)$, then:
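The memoryless property can also be checked by simulation. In this sketch the rate $\lambda = 1/100$ matches the example, while the ages $s = 100$ and $t = 50$ are hypothetical values picked for illustration; among simulated lifetimes that exceed $s$, the fraction that also exceed $s + t$ should be close to $P(X > t) = e^{-\lambda t}$:

```python
import math
import random

lam = 1 / 100       # rate of Exp(1/100), so the mean lifetime is 100
s, t = 100, 50      # hypothetical ages chosen for illustration

rng = random.Random(3)
lifetimes = [rng.expovariate(lam) for _ in range(200_000)]
survived_s = [x for x in lifetimes if x > s]
cond = sum(x > s + t for x in survived_s) / len(survived_s)   # estimate of P(X > s + t | X > s)
uncond = math.exp(-lam * t)                                  # exact P(X > t)
print(cond, uncond)
```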

Gamma (Continuous)

Recall the gamma function, $\Gamma(\alpha)$:

A gamma random variable, $X \sim \text{Gamma}(\alpha, \lambda)$, has the following pdf:

Additionally, $X$ has the following properties:

Note what happens when $\alpha = 1$: the gamma random variable reduces to an exponential random variable. Another way to say this is that the gamma distribution generalizes the exponential distribution.

If $X_1, X_2,...,X_n \overset{\text{iid}}{\sim} \text{Exp}(\lambda)$, then we can express a new random variable, $Y$:

The $\text{Gamma}(n,\lambda)$ distribution is also called the $\text{Erlang}_n(\lambda)$ distribution, and has the following cdf:

Triangular (Continuous)

Suppose that we have a random variable, $X \sim \text{Triangular}(a,b,c)$. The triangular distribution is parameterized by three fields - the smallest possible observation, $a$, the "most likely" observation, $b$, and the largest possible observation, $c$ - and is useful for models with limited data. $X$ has the following pdf:

Additionally, $X$ has the following property:

Beta (Continuous)

Suppose we have a random variable, $X \sim \text{Beta}(a,b)$. $X$ has the following pdf:

Additionally, $X$ has the following properties:

Normal (Continuous)

Suppose we have a random variable, $X \sim \text{Normal}(\mu, \sigma^2)$. $X$ has the following pdf, which, when graphed, produces the famous bell curve:

Additionally, $X$ has the following properties:

Theorem

If $X \sim \text{Nor}(\mu, \sigma^2)$, then a linear function of $X$ is also normal: $aX + b \sim \text{Nor}\left(a\mu + b, a^2 \sigma^2\right)$. Interestingly, if we set $a = 1 / \sigma$ and $b = -\mu / \sigma$, then $Z = aX + b$ is:

$Z$ is drawn from the standard normal distribution, which has the following pdf:

This distribution also has the cdf $\Phi(z)$, whose values are tabulated. For example, $\Phi(1.96) \doteq 0.975$.

Theorem

If $X_1$ and $X_2$ are independent with $X_i \sim \text{Nor}(\mu_i,\sigma_i^2), i = 1,2$, then $X_1 + X_2 \sim \text{Nor}(\mu_1 + \mu_2, \sigma_1^2 + \sigma_2^2)$.

For example, suppose that we have two random variables, $X \sim \text{Nor}(3,4)$ and $Y \sim \text{Nor}(4,6)$ and $X$ and $Y$ are independent. How is $Z = 2X - 3Y + 1$ distributed? Let's first start with $2X$:

Now, let's find the distribution of $-3Y + 1$:

Finally, we combine the two distributions linearly:
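Applying the theorems term by term gives $2X \sim \text{Nor}(6, 16)$, $-3Y + 1 \sim \text{Nor}(-11, 54)$, and therefore $Z \sim \text{Nor}(-5, 70)$. A simulation sketch can confirm this; note that Python's `random.gauss` takes a standard deviation, not a variance:

```python
import math
import random
import statistics

rng = random.Random(11)
n = 200_000
# Nor(mean, variance) in the text; random.gauss(mu, sigma) takes the standard deviation.
z = [2 * rng.gauss(3, math.sqrt(4)) - 3 * rng.gauss(4, math.sqrt(6)) + 1 for _ in range(n)]
print(statistics.fmean(z), statistics.variance(z))   # should be close to -5 and 70
```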

Let's look at the corollary of a previous theorem. If $X_1, ..., X_n$ are iid $\text{Nor}(\mu, \sigma^2)$, then the sample mean $\bar{X}_n \sim \text{Nor}(\mu, \sigma^2 / n)$. While the sample mean has the same mean as the distribution from which the observations were sampled, its variance has decreased by a factor of $n$. As we get more information, the sample mean becomes less variable. This observation is a special case of the law of large numbers, which states that $\bar{X}_n$ approximates $\mu$ as $n$ becomes large.

Limit Theorems

In this lesson, we are going to see what happens when we sample a large number of iid random variables. As we will see, normality happens, and, in particular, we will be looking at the central limit theorem.

Corollary (of a previous theorem)

If $X_1,..., X_n$ are iid $\text{Nor}(\mu, \sigma^2)$, then the sample mean, $\bar{X}_n$, is distributed as $\text{Nor}(\mu, \sigma^2 / n)$. In other words:

Notice that $\bar{X}$ has the same mean as the distribution from which the observations were sampled, but the variance decreases by a factor of the number of samples, $n$. Said another way, the variability of $\bar{X}$ decreases as $n$ increases, driving $\bar{X}$ toward $\mu$.

As we said before, this observation is a special case of the law of large numbers, which states that $\bar{X}$ approximates $\mu$ as $n$ becomes large.

Definition

Suppose we have a sequence of random variables, $Y_1, Y_2, \ldots$, with respective cdfs $F_{Y_1}(y), F_{Y_2}(y), \ldots$. This sequence of random variables converges in distribution to the random variable $Y$ having cdf $F_Y(y)$ if $\lim_{n \to \infty}F_{Y_n}(y) = F_Y(y)$ for all $y$ at which $F_Y$ is continuous. We express this idea of converging in distribution with the following notation:

How do we use this? Well, if $Y_n \overset{d}{\to} Y$, and $n$ is large, then we can approximate the distribution of $Y_n$ by the limit distribution of $Y$.

Central Limit Theorem

Suppose $X_1, X_2,...,X_n$ are iid random variables sampled from some distribution with pdf/pmf $f(x)$ having mean $\mu$ and variance $\sigma^2$. Let's define a random variable $Z_n$:

We can simplify this expression. Let's first split the sum:

Let's work on the first term:

Note that the sum of the random variables divided by $n$ equals $\bar{X_n}$:

Now, let's work on the second term, dividing $n$ by $\sqrt{n}$:

Let's combine the two terms:

The expression above converges in distribution to a $\text{Nor}(0, 1)$ distribution:

Thus, the cdf of $Z_n$ approaches $\Phi(z)$, the standard normal cdf, as $n$ increases.

As a rule of thumb, the central limit theorem approximation works well if the pdf/pmf is fairly symmetric and the number of samples, $n$, is greater than fifteen; more skewed distributions need a larger $n$.

Example

Suppose that we have $100$ observations, $X_1, X_2, \ldots, X_{100} \overset{\text{iid}}{\sim} \text{Exp}(1)$. Note that, with $\lambda = 1$, $\mu = \sigma^2 = 1$. What is the probability that the sum of all $100$ random variables falls between $90$ and $110$?

We can use the central limit theorem to approximate this probability. Let's apply $f(x) = (x - n\mu) / (\sqrt{n}\sigma)$ to each part of the inequality, converting the sum of our observations to $Z_{100}$:

Since $Z_n \overset{d}{\to} \text{Nor}(0,1)$, we can approximate that $Z_{100} \sim \text{Nor}(0,1)$:

This final expression is asking for the probability that a $\text{Nor}(0,1)$ random variable falls between $-1$ and $1$. The standard normal distribution has a standard deviation of $1$, and we know that the probability of falling within one standard deviation of the mean is $0.6827 \approx 68\%$.

Now, remember that the sum of $n$ iid $\text{Exp}(\lambda)$ random variables is an Erlang random variable:

Erlang random variables have cdf's, which we can use - directly or through software such as Minitab - to obtain the exact value of the probability above:

Not bad.
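The sketch below reproduces both numbers for the interval $[90, 110]$, the interval that corresponds to the standardized bounds $-1$ and $1$. It assumes the standard Erlang cdf $F(y) = 1 - \sum_{k=0}^{n-1} e^{-\lambda y}(\lambda y)^k / k!$ in place of Minitab, and computes $\Phi$ from `math.erf`; the helper names are illustrative:

```python
import math


def erlang_cdf(y, n, lam):
    """P(X_1 + ... + X_n <= y) for iid Exp(lam): 1 - sum_{k<n} e^{-lam y} (lam y)^k / k!.

    Fine for moderate lam * y; math.exp underflows to 0 once lam * y exceeds about 745.
    """
    term = math.exp(-lam * y)   # k = 0 term of the Poisson sum
    total = 0.0
    for k in range(n):
        total += term
        term *= lam * y / (k + 1)   # turn the k-th term into the (k + 1)-th term
    return 1.0 - total


def std_normal_cdf(z):
    """Phi(z) for the standard normal distribution."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


n, lam = 100, 1.0
mu = sigma = 1 / lam
lo, hi = 90, 110
z_lo = (lo - n * mu) / (math.sqrt(n) * sigma)
z_hi = (hi - n * mu) / (math.sqrt(n) * sigma)
clt = std_normal_cdf(z_hi) - std_normal_cdf(z_lo)
exact = erlang_cdf(hi, n, lam) - erlang_cdf(lo, n, lam)
print(clt, exact)
```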

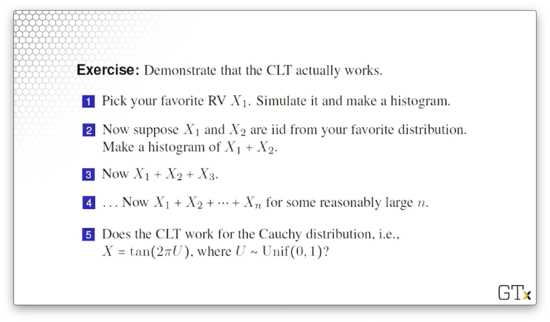

Exercises

Introduction to Estimation (OPTIONAL)

In this lesson, we are going to start our review of basic statistics. In particular, we are going to talk about unbiased estimation and mean squared error.

Statistic Definition

A statistic is a function of the observations $X_1,...,X_n$ that is not explicitly dependent on any unknown parameters. For example, the sample mean, $\bar{X}$, and the sample variance, $S^2$, are two statistics:

Statistics are random variables. In other words, if we take two different samples, we should expect to see two different values for a given statistic.

We usually use statistics to estimate some unknown parameter from the underlying probability distribution of the $X_i$'s. For instance, we use the sample mean, $\bar{X}$, to estimate the true mean, $\mu$, of the underlying distribution, which we won't normally know. If $\mu$ is the true mean, then we can take a bunch of samples and use $\bar{X}$ to estimate $\mu$. We know, via the law of large numbers, that as $n \to \infty$, $\bar{X} \to \mu$.

Point Estimator

Let's suppose that we have a collection of iid random variables, $X_1,...,X_n$. Let $T(\bold{X}) \equiv T(X_1,...,X_n)$ be a function that we can compute based only on the observations. Therefore, $T(\bold{X})$ is a statistic. If we use $T(\bold{X})$ to estimate some unknown parameter $\theta$, then $T(\bold{X})$ is known as a point estimator for $\theta$.

For example, $\bar{X}$ is usually a point estimator for the true mean, $\mu = E[X_i]$, and $S^2$ is often a point estimator for the true variance, $\sigma^2 = \text{Var}(X_i)$.

$T(\bold{X})$ should have specific properties:

Its expected value should equal the parameter it's trying to estimate. This property is known as unbiasedness.

It should have a low variance. It doesn't do us any good if $T(\bold{X})$ is bouncing around depending on the sample we take.

Unbiasedness

We say that $T(\bold{X})$ is unbiased for $\theta$ if $E[T(\bold{X})] = \theta$. For example, suppose that random variables, $X_1,...,X_n$ are iid anything with mean $\mu$. Then:

Since $E[\bar{X}] = \mu$, $\bar{X}$ is always unbiased for $\mu$. That's why we call it the sample mean.

Similarly, suppose we have random variables, $X_1,...,X_n$ which are iid $\text{Exp}(\lambda)$. Then, $\bar{X}$ is unbiased for $\mu = E[X_i] = 1 / \lambda$. Even though $\lambda$ is unknown, we know that $\bar{X}$ is a good estimator for $1/ \lambda$.

Be careful, though. Just because $\bar{X}$ is unbiased for $1 / \lambda$ does not mean that $1 / \bar{X}$ is unbiased for $\lambda$: $E[1/\bar{X}] \neq 1 /E[\bar{X}] = \lambda$. In fact, $1/\bar{X}$ is biased for $\lambda$ in this exponential case.
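A simulation makes the bias visible. For $n \geq 2$, one can show that $E[1/\bar{X}] = n\lambda/(n-1)$, which overshoots $\lambda$. The sketch below uses arbitrary illustrative values $n = 5$ and $\lambda = 2$ (so the target is $2$, but $E[1/\bar{X}] = 2.5$) and estimates $E[1/\bar{X}]$ by Monte Carlo:

```python
import random
import statistics


def mean_of_reciprocal_sample_mean(n, lam, reps=100_000, seed=5):
    """Monte Carlo estimate of E[1 / X-bar] for samples of size n from Exp(lam)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(reps):
        xbar = statistics.fmean(rng.expovariate(lam) for _ in range(n))
        total += 1.0 / xbar
    return total / reps


n, lam = 5, 2.0
estimate = mean_of_reciprocal_sample_mean(n, lam)
print(estimate, lam, n * lam / (n - 1))   # estimate sits near 2.5, not 2
```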

Here's another example. Suppose that random variables, $X_1,...,X_n$ are iid anything with mean $\mu$ and variance $\sigma^2$. Then:

Since $E[S^2] = \sigma^2$, $S^2$ is always unbiased for $\sigma^2$. That's why we called it the sample variance.

For example, suppose random variables $X_1,...,X_n$ are iid $\text{Exp}(\lambda)$. Then $S^2$ is unbiased for $\text{Var}(X_i) = 1 / \lambda^2$.

Let's give a proof for the unbiasedness of $S^2$ as an estimate for $\sigma^2$. First, let's convert $S^2$ into a better form:

Let's rearrange the middle sum:

Remember that $\bar{X}$ represents the average of all the $X_i$'s: $\sum X_i / n$. Thus, if we just sum the $X_i$'s and don't divide by $n$, we have a quantity equal to $n\bar{X}$:

Now, back to action:

Let's take the expected value:

Note that $E[X_i^2]$ is the same for all $X_i$, so the sum is just $nE[X_1^2]$:

We know that $\text{Var}(X) = E[X^2] - (E[X])^2$, so $E[X^2] = \text{Var}(X) + (E[X])^2$. Therefore:

Remember that $E[X_1] = E[\bar{X}]$, so:

Furthermore, remember that $\text{Var}(\bar{X}) = \text{Var}(X_1) /n = \sigma^2 / n$. Therefore:

Unfortunately, while $S^2$ is unbiased for the variance $\sigma^2$, $S$ is biased for the standard deviation $\sigma$.
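Both claims can be verified exactly on a small discrete example. The sketch assumes a hypothetical population, $X$ uniform on $\{0, 1, 2, 3\}$ (so $\sigma^2 = 5/4$), and enumerates every equally likely sample of size $n = 3$: the average of $S^2$ equals $\sigma^2$ exactly, dividing by $n$ instead of $n - 1$ underestimates it, and the average of $S$ falls short of $\sigma$:

```python
import itertools
import math
from fractions import Fraction

support = [0, 1, 2, 3]          # hypothetical population: X uniform on {0, 1, 2, 3}
prob = Fraction(1, len(support))
n = 3                           # sample size

mu = sum(x * prob for x in support)
sigma2 = sum((x - mu) ** 2 * prob for x in support)   # population variance, 5/4 here

e_s2 = Fraction(0)      # E[S^2], divisor n - 1
e_div_n = Fraction(0)   # expectation of the same sum of squares divided by n instead
e_s = 0.0               # E[S], as a float because of the square root
p_sample = prob ** n    # every ordered sample of size n is equally likely
for sample in itertools.product(support, repeat=n):
    xbar = Fraction(sum(sample), n)
    ss = sum((x - xbar) ** 2 for x in sample)         # sum of (X_i - X-bar)^2
    e_s2 += p_sample * ss / (n - 1)
    e_div_n += p_sample * ss / n
    e_s += float(p_sample) * math.sqrt(float(ss / (n - 1)))

print(sigma2, e_s2, e_div_n)            # e_s2 matches sigma2 exactly
print(e_s, math.sqrt(float(sigma2)))    # E[S] falls below sigma
```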

Example

Suppose that we have $n$ random variables, $X_1,...,X_n \overset{\text{iid}}{\sim} \text{Unif}(0,\theta)$. We know that our observations have a lower bound of $0$, but we don't know the value of the upper bound, $\theta$. As is often the case, we sample many observations from the distribution and use that sample to estimate the unknown parameter.

Consider two estimators for $\theta$:

Let's look at the first estimator. We know that $E[Y_1] = 2E[\bar{X}]$, by definition. Similarly, we know that $2E[\bar{X}] = 2E[X_i]$, since $\bar{X}$ is always unbiased for the mean. Recall how we compute the expected value for a uniform random variable:

Therefore:

As we can see, $Y_1$ is unbiased for $\theta$.

It's also the case that $Y_2$ is unbiased, but it takes more work to demonstrate. As a first step, take the cdf of the maximum of the $X_i$'s, $M \equiv \max_iX_i$. Here's what $P(M \leq y)$ looks like:

Since $M$ is the maximum, $M \leq y$ exactly when every $X_i \leq y$. Since the $X_i$'s are independent, we can take the product of the individual probabilities:

Now, we know, by definition, that the cdf is the integral of the pdf. Remember that the pdf for a uniform distribution, $\text{Unif}(a,b)$, is:

Let's rewrite $P(M \leq y)$:

Again, we know that the pdf is the derivative of the cdf, so:

With the pdf in hand, we can get the expected value of $M$:

Note that $E[M] \neq \theta$, so $M$ is not an unbiased estimator for $\theta$. However, remember how we defined $Y_2$:

Thus:

Therefore, $Y_2$ is unbiased for $\theta$.

Both estimators are unbiased, so which is better? Let's compare variances now. After similar algebra, we see:

Since the variance of $Y_2$ divides by a term of order $n^2$, while the variance of $Y_1$ only divides by $n$, $Y_2$ has a lower variance than $Y_1$ for every $n > 1$ (dramatically lower for large $n$) and is, therefore, the better estimator.
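For reference, the algebra works out to $\text{Var}(Y_1) = \theta^2/(3n)$ and $\text{Var}(Y_2) = \theta^2/(n(n+2))$. The sketch below checks these closed forms against simulated variances, again assuming $Y_1 = 2\bar{X}$ and $Y_2 = \frac{n+1}{n}\max_i X_i$; the choices $\theta = 1$, $n = 10$, and the seed are arbitrary.

```python
import random


def var_y1(theta, n):
    """Var(2 * Xbar) = 4 * (theta^2 / 12) / n = theta^2 / (3n)."""
    return theta ** 2 / (3 * n)


def var_y2(theta, n):
    """Var((n + 1) / n * max X_i) = theta^2 / (n (n + 2))."""
    return theta ** 2 / (n * (n + 2))


def population_variance(values):
    """Average squared deviation from the mean (divides by the count)."""
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values) / len(values)


def simulated_variances(theta, n, reps=40_000, seed=4):
    """Simulate Y_1 and Y_2 many times and return their empirical variances."""
    rng = random.Random(seed)
    y1_draws, y2_draws = [], []
    for _ in range(reps):
        xs = [rng.uniform(0.0, theta) for _ in range(n)]
        y1_draws.append(2 * sum(xs) / n)
        y2_draws.append((n + 1) / n * max(xs))
    return population_variance(y1_draws), population_variance(y2_draws)


theta, n = 1.0, 10
sim_v1, sim_v2 = simulated_variances(theta, n)
print(f"Var(Y_1): simulated {sim_v1:.5f}, formula {var_y1(theta, n):.5f}")
print(f"Var(Y_2): simulated {sim_v2:.5f}, formula {var_y2(theta, n):.5f}")
```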

Bias and Mean Squared Error

The bias of an estimator, $T[\bold{X}]$, is the difference between the estimator's expected value and the value of the parameter it's trying to estimate: $\text{Bias}(T) \equiv E[T] - \theta$. When $E[T] = \theta$, the bias is $0$ and the estimator is unbiased.

The mean squared error of an estimator, $T[\bold{X}]$, is the expected value of the squared deviation of the estimator from the parameter: $\text{MSE}(T) \equiv E[(T-\theta)^2]$.

Remember the equation for variance:

Using this equation, we can rewrite $\text{MSE}(T)$:

Usually, we use mean squared error to evaluate estimators. As a result, when selecting among multiple estimators, we might choose a biased estimator over an unbiased one, so long as the biased estimator's MSE is the lowest among the options.
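To make this trade-off concrete, here is an illustration we have added (it is not from the notes above): in the uniform example, consider estimators of the form $cM$. Using $E[M] = \frac{n}{n+1}\theta$ and $E[M^2] = \frac{n}{n+2}\theta^2$, the sketch below computes $\text{MSE}(cM) = \text{Var}(cM) + \text{Bias}(cM)^2$ exactly with Python's `fractions` module. The unbiased choice $c = \frac{n+1}{n}$ (our $Y_2$) has MSE $\theta^2/(n(n+2))$, but the biased choice $c = \frac{n+2}{n+1}$ does slightly better, with MSE $\theta^2/(n+1)^2$.

```python
from fractions import Fraction


def mse_scaled_max(c, n, theta=1):
    """Exact MSE of c * M, where M is the max of n iid Unif(0, theta) draws.

    Uses E[M] = n*theta/(n+1) and E[M^2] = n*theta^2/(n+2), then
    MSE = Var(cM) + Bias(cM)^2.
    """
    e_m = Fraction(n, n + 1) * theta
    e_m2 = Fraction(n, n + 2) * theta ** 2
    variance = c ** 2 * (e_m2 - e_m ** 2)
    bias = c * e_m - theta
    return variance + bias ** 2


n = 10
print("unbiased Y_2:", mse_scaled_max(Fraction(n + 1, n), n))      # c = (n+1)/n
print("biased cM   :", mse_scaled_max(Fraction(n + 2, n + 1), n))  # c = (n+2)/(n+1)
print("plain M     :", mse_scaled_max(1, n))                       # c = 1
```

With $n = 10$ and $\theta = 1$, the unbiased estimator has MSE $1/120$, the biased one has MSE $1/121$, and $M$ itself has MSE $1/66$.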

Relative Efficiency

The relative efficiency of one estimator, $T_1$, to another, $T_2$, is the ratio of the mean squared errors: $\text{MSE}(T_1) / \text{MSE}(T_2)$. If the relative efficiency is less than one, we want $T_1$; otherwise, we want $T_2$.

Let's compute the relative efficiency of the two estimators we used in the previous example:

Remember that both estimators are unbiased, so the bias is zero by definition. As a result, the mean squared errors of the two estimators are determined solely by their variances:

Let's calculate the relative efficiency:

The relative efficiency is greater than one for all $n > 1$, so $Y_2$ is the better estimator for every sample size larger than one. (When $n = 1$, the two estimators coincide, since both equal $2X_1$.)
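Here is the same calculation as an exact Python sketch, using $\text{Var}(Y_1) = \theta^2/(3n)$ and $\text{Var}(Y_2) = \theta^2/(n(n+2))$. Because $\theta^2$ cancels in the ratio, the function takes only $n$, and the result simplifies to $(n+2)/3$.

```python
from fractions import Fraction


def relative_efficiency(n):
    """MSE(Y_1) / MSE(Y_2) for the two unbiased Unif(0, theta) estimators.

    Both estimators are unbiased, so each MSE is just a variance; theta^2
    cancels, leaving (1 / (3n)) / (1 / (n (n + 2))).
    """
    mse_y1 = Fraction(1, 3 * n)
    mse_y2 = Fraction(1, n * (n + 2))
    return mse_y1 / mse_y2


for n in (1, 2, 10, 100):
    print(n, relative_efficiency(n))
```

At $n = 1$ the ratio is exactly $1$, and it grows linearly in $n$ from there.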

Maximum Likelihood Estimation (OPTIONAL)

In this lesson, we are going to talk about maximum likelihood estimation, which is perhaps the most important point estimation method. It's a very flexible technique that many software packages use to help estimate parameters from various distributions.

Likelihood Function and Maximum Likelihood Estimator

Consider an iid random sample, $X_1,...,X_n$, where each $X_i$ has pdf/pmf $f(x)$. Additionally, suppose that $\theta$ is some unknown parameter of the distribution of the $X_i$'s that we would like to estimate. We can define a likelihood function, $L(\theta)$, as:

The maximum likelihood estimator (MLE) of $\theta$ is the value of $\theta$ that maximizes $L(\theta)$. The MLE is a function of the $X_i$'s and is itself a random variable.

Example

Consider a random sample, $X_1,...,X_n \overset{\text{iid}}{\sim} \text{Exp}(\lambda)$. Find the MLE for $\lambda$. Note that, in this case, $\lambda$ is taking the place of the abstract parameter, $\theta$. Now:

We know that exponential random variables have the following pdf:

Therefore:

Let's expand the product:

We can pull out a $\lambda^n$ term:

Remember what happens to exponents when we multiply powers of the same base:

Let's apply this to our product (and we can swap in $\exp$ notation to make things easier to read):

Now, we need to maximize $L(\lambda)$ with respect to $\lambda$. We could take the derivative of $L(\lambda)$, but we can use a trick! Since the natural log function is strictly increasing, the $\lambda$ that maximizes $L(\lambda)$ also maximizes $\ln(L(\lambda))$. Let's take the natural log of $L(\lambda)$:

Let's remember three different rules here:

Therefore:

Now, let's take the derivative:

Finally, we set the derivative equal to $0$ and solve for $\lambda$:

Thus, the maximum likelihood estimator for $\lambda$ is $1 / \bar{X}$, which makes a lot of sense. The mean of the exponential distribution is $1 / \lambda$, and we usually estimate that mean by $\bar{X}$. Since $\bar{X}$ is a good estimator for $1 / \lambda$, it stands to reason that a good estimator for $\lambda$ is $1 / \bar{X}$.
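As a sanity check on the calculus, the sketch below maximizes the exponential log-likelihood $\ln L(\lambda) = n\ln\lambda - \lambda\sum_{i=1}^n x_i$ numerically and compares the result with the closed form $1/\bar{x}$. It uses a golden-section search, a generic one-dimensional optimizer we chose for illustration (nothing in the notes depends on it), and the data are hypothetical. Since $\frac{d^2}{d\lambda^2}\ln L(\lambda) = -n/\lambda^2 < 0$, the log-likelihood is concave on $\lambda > 0$, so the search homes in on the maximum.

```python
import math


def exp_log_likelihood(lam, xs):
    """ln L(lambda) = n ln(lambda) - lambda * sum(x_i) for iid Exp(lambda) data."""
    return len(xs) * math.log(lam) - lam * sum(xs)


def golden_section_max(f, lo, hi, tol=1e-9):
    """Locate the maximizer of a unimodal function f on [lo, hi]."""
    inv_phi = (math.sqrt(5) - 1) / 2  # about 0.618
    a, b = lo, hi
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    while b - a > tol:
        if f(c) > f(d):
            # The maximizer lies in [a, d]; the old c becomes the new d.
            b, d = d, c
            c = b - inv_phi * (b - a)
        else:
            # The maximizer lies in [c, b]; the old d becomes the new c.
            a, c = c, d
            d = a + inv_phi * (b - a)
    return (a + b) / 2


data = [0.8, 2.1, 0.3, 1.7, 0.6]  # hypothetical observations, xbar = 1.1
closed_form = 1 / (sum(data) / len(data))  # lambda_hat = 1 / xbar
numeric = golden_section_max(lambda lam: exp_log_likelihood(lam, data), 1e-6, 10.0)
print(f"closed form {closed_form:.6f}, numeric {numeric:.6f}")
```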

Conventionally, we put a "hat" over the $\lambda$ that maximizes the likelihood function to indicate that it is the MLE. Such notation looks like this: $\hat{\lambda}$.

Note that we went from "little x's", $x_i$, to "big x", $\bar{X}$, in the equation. We do this to indicate that $\hat{\lambda}$ is a random variable.

Just to be careful, we should also perform a second-derivative test on $\ln(L(\lambda))$ to confirm that we found a maximum rather than a minimum. Here, the second derivative is $-n / \lambda^2 < 0$, so the critical point is indeed a maximum.

Invariance Property of MLE's

If $\hat{\theta}$ is the MLE of some parameter, $\theta$, and $h(\cdot)$ is a 1:1 function, then $h(\hat{\theta})$ is the MLE of $h(\theta)$.

For example, suppose we have a random sample, $X_1,...,X_n \overset{\text{iid}}{\sim} \text{Exp}(\lambda)$. The survival function, $\bar{F}(x)$, is:

In addition, we saw that the MLE for $\lambda$ is $\hat{\lambda} = 1 / \bar{X}$. Therefore, by the invariance property, the MLE for $\bar{F}(x)$ is the survival function with $\hat{\lambda}$ substituted for $\lambda$:
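In code, the invariance property amounts to plugging $\hat{\lambda} = 1/\bar{X}$ into the exponential survival function $\bar{F}(x) = e^{-\lambda x}$, which is a one-to-one function of $\lambda$ for any fixed $x > 0$. The function name and the sample below are hypothetical.

```python
import math


def survival_mle(x, sample):
    """MLE of P(X > x) for Exp(lambda) data, via invariance: exp(-lambda_hat * x)."""
    lam_hat = len(sample) / sum(sample)  # lambda_hat = 1 / Xbar
    return math.exp(-lam_hat * x)


sample = [0.8, 2.1, 0.3, 1.7, 0.6]  # hypothetical observations, Xbar = 1.1
print(survival_mle(1.0, sample))
```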

Confidence Intervals (OPTIONAL)

In this lesson, we are going to expand on the idea of point estimators for parameters and look at confidence intervals. We will use confidence intervals throughout this course, especially when we look at output analysis.

Important Distributions

Suppose we have a random sample, $Z_1, Z_2,...,Z_k$, that is iid $\text{Nor}(0,1)$. Then, $Y = \sum_{i=1}^k Z_i^2$ has the $\chi^2$ distribution with $k$ [degrees of freedom](https://en.wikipedia.org/wiki/Degrees_of_freedom_(statistics)). We can express $Y$ as $Y \sim \chi^2(k)$, and $Y$ has an expected value of $k$ and a variance of $2k$.
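This construction translates directly into a simulation. The Python sketch below sums $k$ squared standard normal draws many times and checks that the sample mean and variance come out near $k$ and $2k$; the degrees of freedom, number of draws, and seed are arbitrary choices.

```python
import random


def chi_square_draw(k, rng):
    """One chi^2(k) draw: the sum of k squared independent Nor(0, 1) variables."""
    return sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(k))


def mean_and_variance(values):
    """Sample mean and unbiased sample variance."""
    n = len(values)
    m = sum(values) / n
    return m, sum((v - m) ** 2 for v in values) / (n - 1)


rng = random.Random(5)
k = 4
draws = [chi_square_draw(k, rng) for _ in range(50_000)]
mean, var = mean_and_variance(draws)
print(f"mean ~ {mean:.3f} (k = {k}), variance ~ {var:.3f} (2k = {2 * k})")
```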

If $Z \sim \text{Nor}(0,1)$, $Y \sim \chi^2(k)$, and $Z$ and $Y$ are independent, then $T = Z / \sqrt{Y /k}$ has the Student's t distribution with $k$ degrees of freedom. We can express $T$ as $T \sim t(k)$. Note that $t(1)$ is the Cauchy distribution.

If $Y_1 \sim \chi^2(m), Y_2 \sim \chi^2(n)$, and $Y_1$ and $Y_2$ are independent, then $F = (Y_1 /m)/(Y_2/n)$ has the F distribution with $m$ and $n$ degrees of freedom. Notation: $F \sim F(m,n)$.

We can use these distributions to construct confidence intervals for $\mu$ and $\sigma^2$ under a variety of assumptions.

Confidence Interval

A $100(1-\alpha)\%$ two-sided confidence interval for an unknown parameter, $\theta$, is a random interval, $[L, U]$, such that $P(L \leq \theta \leq U) = 1 - \alpha$.

Here is an example of a confidence interval. We might say that we are 95% confident that the proportion of people voting for some candidate is between 47% and 51%. Strictly speaking, the 0.95 is a property of the procedure: intervals constructed this way contain the true proportion 95% of the time.
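The probability in the definition refers to the random interval $[L, U]$, not to any single realized interval. A simulation illustrates this: if we repeat the sampling many times, roughly $100(1-\alpha)\%$ of the resulting intervals should contain the true parameter. The sketch below uses the known-variance interval $\bar{X}_n \pm z_{\alpha/2}\,\sigma/\sqrt{n}$ as a concrete procedure with normal data; $\mu$, $\sigma$, $n$, the replication count, and the seed are arbitrary choices.

```python
import math
import random
from statistics import NormalDist


def z_interval(xs, sigma, alpha=0.05):
    """Known-variance interval: Xbar -/+ z_{alpha/2} * sigma / sqrt(n)."""
    n = len(xs)
    xbar = sum(xs) / n
    half = NormalDist().inv_cdf(1 - alpha / 2) * sigma / math.sqrt(n)
    return xbar - half, xbar + half


def coverage(mu=10.0, sigma=3.0, n=15, reps=20_000, seed=6):
    """Fraction of simulated intervals [L, U] that contain the true mu."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        lo, hi = z_interval([rng.gauss(mu, sigma) for _ in range(n)], sigma)
        if lo <= mu <= hi:
            hits += 1
    return hits / reps


cov = coverage()
print(f"fraction of intervals covering mu: {cov:.3f}")
```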

Common Confidence Intervals

Suppose that we know the variance of a distribution, $\sigma^2$. Then a $100(1-\alpha)\%$ confidence interval for $\mu$ is:

Notice the structure of the inequality here: $\bar{X}_n - H \leq \mu \leq \bar{X}_n + H$. The expression $H$, known as the half-length, is almost always a product of a constant and the square root of $S^2 /n$. In this rare case, since we know $\sigma^2$, we can use it directly.

What is $z$? $z_\gamma$ is the $1 - \gamma$ quantile of the standard normal distribution, which we can look up:
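Instead of a printed table, Python's standard library can compute these quantiles: `statistics.NormalDist().inv_cdf(p)` returns the $p$ quantile of $\text{Nor}(0,1)$, so $z_\gamma$ is `inv_cdf(1 - gamma)`. The helper name `z_quantile` is our own.

```python
from statistics import NormalDist


def z_quantile(gamma):
    """z_gamma: the 1 - gamma quantile of the standard normal distribution."""
    return NormalDist().inv_cdf(1 - gamma)


for gamma in (0.05, 0.025, 0.005):
    print(gamma, round(z_quantile(gamma), 3))
```

The loop reproduces the familiar table values $1.645$, $1.96$, and $2.576$.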

Let's look at another example. If $\sigma^2$ is unknown, then a $100(1-\alpha)\%$ confidence interval for $\mu$ is:

This example looks very similar to the previous example except that we are using $S^2$ (because we don't know $\sigma^2$), and we are using a $t$ quantile instead of a normal quantile. Note that $t_{\gamma, \nu}$ is the $1 - \gamma$ quantile of the $t(\nu)$ distribution.
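The Python standard library has no $t$ distribution, so the sketch below takes the quantile $t_{\alpha/2,n-1}$ as an argument to be looked up in a table (or computed with a statistics package). The function name and the tiny data set are hypothetical; for $n = 5$ and $\alpha = 0.05$, the table value is $t_{0.025,4} = 2.776$.

```python
import math


def t_interval(xs, t_quantile):
    """Unknown-variance interval: Xbar -/+ t_{alpha/2, n-1} * S / sqrt(n).

    t_quantile must be supplied by the caller (e.g., from a t table).
    """
    n = len(xs)
    xbar = sum(xs) / n
    s = math.sqrt(sum((x - xbar) ** 2 for x in xs) / (n - 1))  # sample std dev
    half_length = t_quantile * s / math.sqrt(n)
    return xbar - half_length, xbar + half_length


# Hypothetical data with n = 5, so we use t_{0.025, 4} = 2.776.
lo, hi = t_interval([1.0, 2.0, 3.0, 4.0, 5.0], t_quantile=2.776)
print(f"[{lo:.4f}, {hi:.4f}]")
```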

Finally, let's look at the $100(1-\alpha)\%$ confidence interval for $\sigma^2$:

Note that $\chi^2_{\gamma, \nu}$ is the $1 - \gamma$ quantile of the $\chi^2(\nu)$ distribution.
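Written out, this interval is $\left[\frac{(n-1)S^2}{\chi^2_{\alpha/2,n-1}}, \frac{(n-1)S^2}{\chi^2_{1-\alpha/2,n-1}}\right]$, so the larger quantile produces the lower endpoint. As with the $t$ case, the sketch below takes the two quantiles as arguments because the standard library does not provide them. The example uses $n = 20$ with the table values $\chi^2_{0.025,19} = 32.852$ and $\chi^2_{0.975,19} = 8.907$; the function name and the sample variance passed in are hypothetical.

```python
def variance_interval(s2, n, chi2_upper, chi2_lower):
    """100(1 - alpha)% interval for sigma^2 from the sample variance s2.

    chi2_upper is chi^2_{alpha/2, n-1} (the 1 - alpha/2 quantile) and
    chi2_lower is chi^2_{1-alpha/2, n-1} (the alpha/2 quantile).
    """
    if not chi2_upper > chi2_lower > 0:
        raise ValueError("expected chi2_upper > chi2_lower > 0")
    scaled = (n - 1) * s2
    return scaled / chi2_upper, scaled / chi2_lower


lo, hi = variance_interval(s2=1.0, n=20, chi2_upper=32.852, chi2_lower=8.907)
print(f"[{lo:.4f}, {hi:.4f}]")
```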

Example

We are going to look at a sample of 20 residual flame times, in seconds, of treated specimens of children's nightwear, where the residual flame time measures how long a specimen keeps burning after the ignition source is removed. Don't worry; there were no children in the nightwear during this experiment.

Here's the data:

Let's compute a $95\%$ confidence interval for the mean residual flame time. After performing the necessary algebra, we can calculate the following statistics:

Remember the equation for computing confidence intervals for $\mu$ when $\sigma^2$ is unknown:

Since we are constructing a $95\%$ confidence interval, $\alpha = 0.05$. Additionally, since we have 20 observations, $n = 20$. Let's plug in:

Now, with $\gamma = \alpha / 2 = 0.025$, we need to look up the $1-\gamma = 0.975$ quantile of the $t(19)$ distribution. A standard $t$ table gives $t_{0.025,19} = 2.093$. Thus:

After the final arithmetic, we see that the confidence interval is:

We would say that we are 95% confident that the true mean, $\mu$, (of the underlying distribution from which our $20$ observations were sampled) is between $9.8029$ and $9.8921$.
